Sep 4, 2024

Deploying AKS 2 – Creating and Configuring Containers

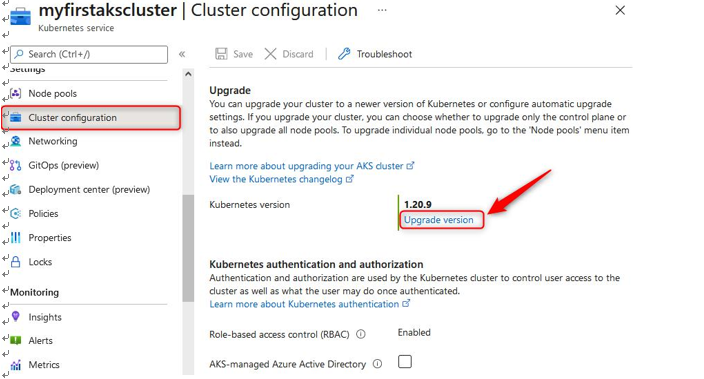

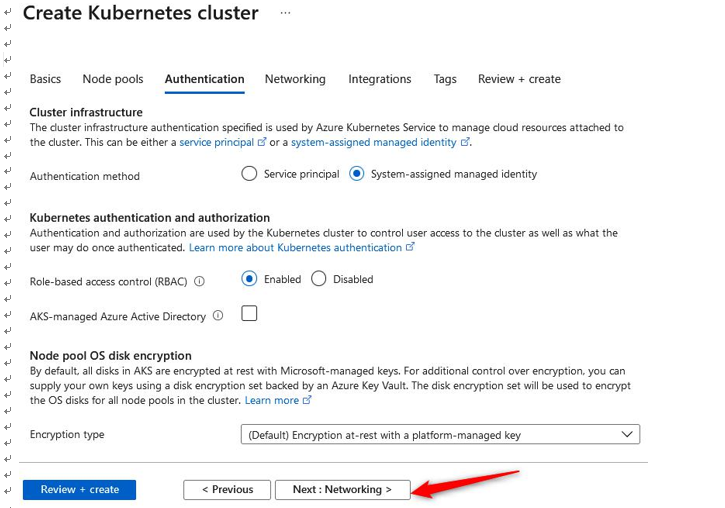

- Next is the Authentication tab. Here, the Authentication method setting can be either Service principal or System-assigned managed identity; AKS uses this identity to manage the infrastructure related to the service. For this exercise, leave it at its default setting. Below that is the Role-based access control (RBAC) option, which is set to Enabled by default. Leave it as Enabled, since it allows fine-grained access control over the resource. You can also enable AKS-managed Azure Active Directory, which lets you manage user permissions on the service based on group membership within Azure AD. Note that once this feature has been enabled, it can't be disabled again, so leave it unchecked for this exercise. Finally, there is the Encryption type value; leave it at the default setting. Click Next: Networking >. The process is illustrated in the following screenshot:

Figure 11.47 – Creating a Kubernetes cluster: Authentication tab

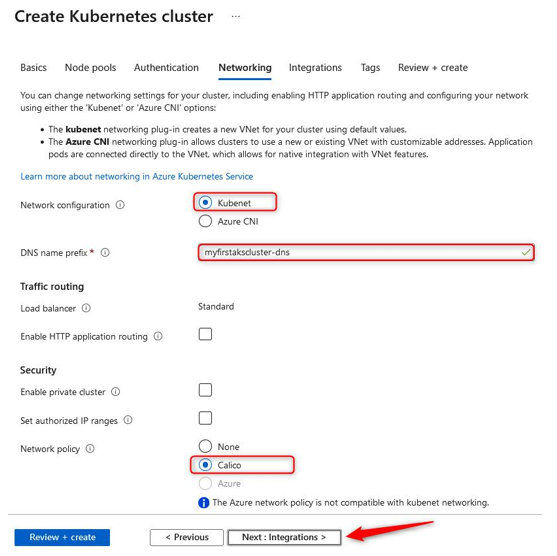
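The choices on the Authentication tab correspond roughly to arguments of the Azure CLI command `az aks create`. The following is a minimal sketch, not part of the original exercise: the function name `auth_flags` and its parameters are hypothetical, and it assumes a managed identity as the starting point, which may differ from the default your portal shows. It only builds an argument list and does not call Azure.

```python
from typing import List, Optional


def auth_flags(
    use_managed_identity: bool = True,
    service_principal_id: Optional[str] = None,
    client_secret: Optional[str] = None,
    enable_aad: bool = False,
) -> List[str]:
    """Return authentication-related arguments for `az aks create` (illustrative)."""
    flags: List[str] = []
    if use_managed_identity:
        flags.append("--enable-managed-identity")
    else:
        # A service principal needs both an application ID and a secret.
        if not (service_principal_id and client_secret):
            raise ValueError("a service principal needs an ID and a secret")
        flags += ["--service-principal", service_principal_id,
                  "--client-secret", client_secret]
    if enable_aad:
        # One-way switch: AKS-managed Azure AD cannot be disabled afterwards.
        flags.append("--enable-aad")
    # Kubernetes RBAC is enabled unless explicitly disabled, so no flag is added.
    return flags


print(auth_flags())
```

Leaving `enable_aad` at `False` mirrors the exercise, which keeps AKS-managed Azure Active Directory unchecked.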

- On the Networking tab, we will leave most of the settings at their default configuration. Note that for Network configuration there are two options: kubenet and Azure CNI. With kubenet, a new VNet is created for the cluster, Pods are allocated an IP address, and containers have network address translation (NAT) connections over the shared Pod IP. Azure Container Networking Interface (Azure CNI) connects Pods directly to a VNet, so containers can have an IP mapped to them directly, which removes the need for a NAT connection. Next is the DNS name prefix field, which forms the first part of the fully qualified domain name (FQDN) for the service. You will also notice the Traffic routing and Security options available for the service; we will discuss these in more detail in one of the next exercises. Select Calico under Network policy. Click Next: Integrations >. The process is illustrated in the following screenshot:

Figure 11.48 – Creating a Kubernetes cluster: Networking tab

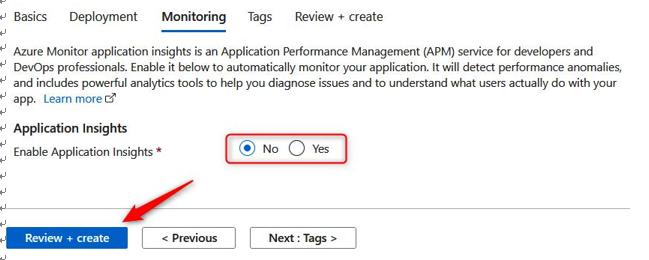

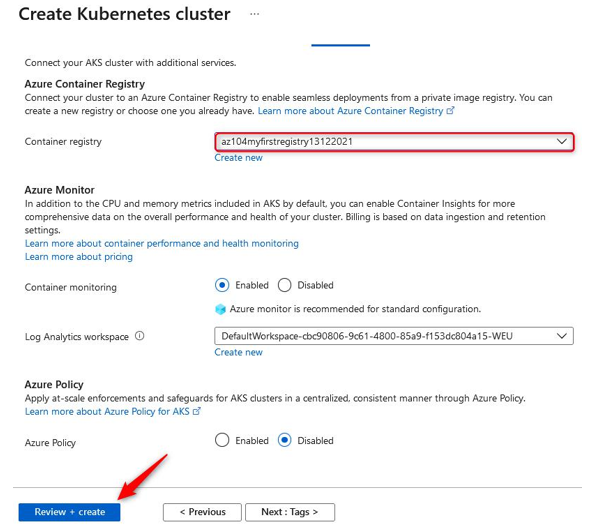
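For readers who script their deployments, the Networking choices above map onto `az aks create` arguments. This is a hedged sketch under stated assumptions: the helper `networking_flags` and the prefix `myaks` are hypothetical, and the code only assembles arguments rather than running the CLI. In the CLI, the value `azure` for `--network-plugin` selects Azure CNI.

```python
from typing import List

# Plugin values accepted by this sketch; "azure" selects Azure CNI.
VALID_PLUGINS = {"kubenet", "azure"}


def networking_flags(
    dns_prefix: str,
    plugin: str = "kubenet",
    policy: str = "calico",
) -> List[str]:
    """Return networking-related arguments for `az aks create` (illustrative)."""
    if plugin not in VALID_PLUGINS:
        raise ValueError(f"unknown network plugin: {plugin!r}")
    if not dns_prefix:
        raise ValueError("the DNS name prefix must not be empty")
    return [
        "--network-plugin", plugin,
        "--network-policy", policy,     # Calico, as selected in the exercise
        "--dns-name-prefix", dns_prefix,  # first part of the cluster FQDN
    ]


# "myaks" is a placeholder prefix, not a value from the exercise.
print(networking_flags("myaks"))
```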

- On the Integrations tab, you will note the option to select a container registry. Select the registry that we previously deployed. You also have the option to deploy a new registry directly from this creation dialog. Next is the option to deploy container monitoring into the solution on creation. We will leave the default setting here; monitoring is outside the scope of this chapter. Finally, you have the option of applying Azure Policy directly to the solution, which is recommended where you want to enhance and standardize your deployments. It helps you deliver consistently and control your deployments on AKS more effectively. Click Review + create, then click Create. The process is illustrated in the following screenshot:

Figure 11.49 – Creating a Kubernetes cluster: deployment
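The Integrations tab settings can be expressed in a similar way. The sketch below assumes the Azure CLI's `--attach-acr` argument and the `monitoring` and `azure-policy` add-on names; the helper `integration_flags` and the registry name `myregistry` are hypothetical placeholders, and whether monitoring is on by default in your portal is an assumption here.

```python
from typing import List


def integration_flags(
    registry_name: str,
    monitoring: bool = True,     # assumption: the portal default enables monitoring
    azure_policy: bool = False,  # assumption: Azure Policy is left off
) -> List[str]:
    """Return integration-related arguments for `az aks create` (illustrative)."""
    # Attach the previously deployed container registry to the cluster.
    flags: List[str] = ["--attach-acr", registry_name]
    addons: List[str] = []
    if monitoring:
        addons.append("monitoring")
    if azure_policy:
        addons.append("azure-policy")
    if addons:
        # Add-ons are passed as one comma-separated value.
        flags += ["--enable-addons", ",".join(addons)]
    return flags


# "myregistry" stands in for the registry created earlier.
print(integration_flags("myregistry"))
```

Keeping each tab's settings in a small function like this makes it easier to review what a scripted deployment will request before anything is created.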

You have just deployed your first Kubernetes cluster, and you now know how to deploy and manage containers at scale in a standardized way. Next, we will look at how to configure storage for Kubernetes and make persistent storage available to our solution.
